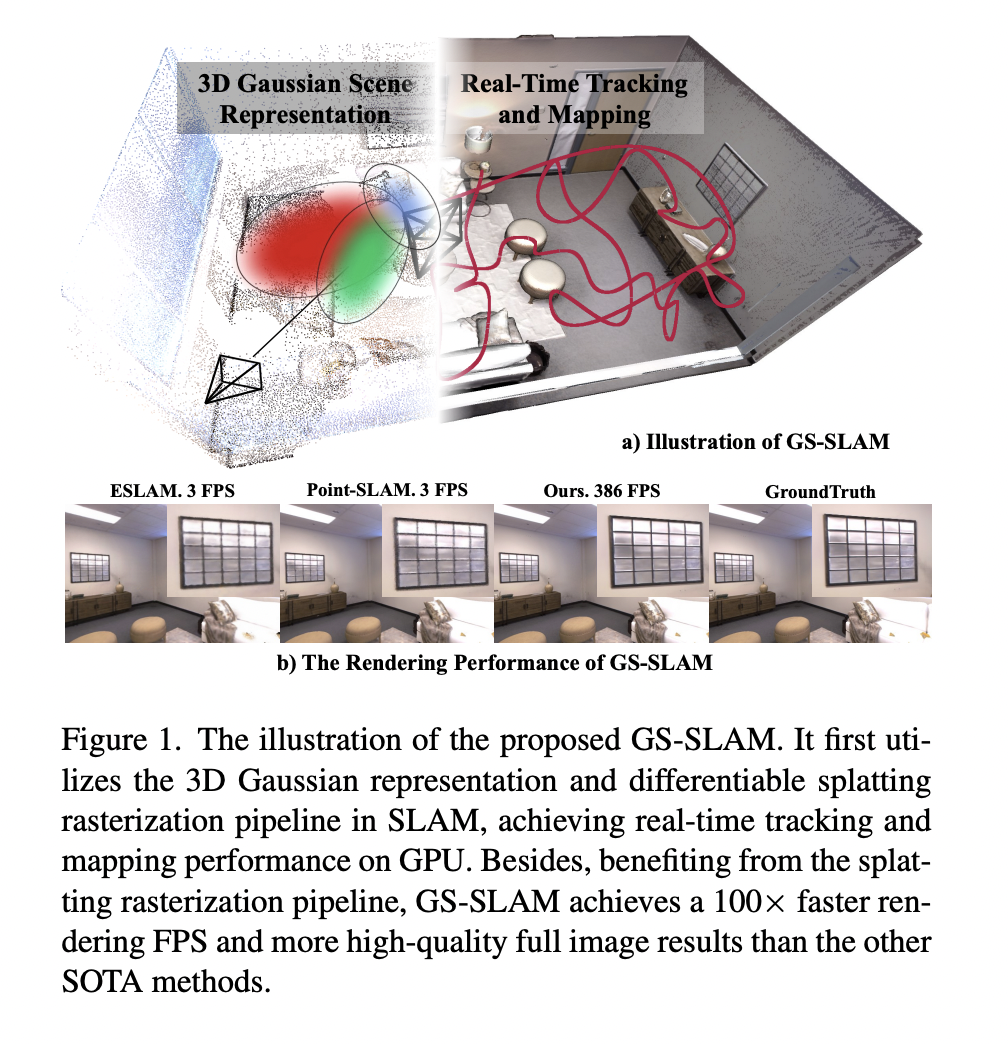

Researchers from Shanghai AI Laboratory, Fudan University, Northwestern Polytechnical University, and The Hong Kong University of Science and Technology have jointly developed GS-SLAM, a Simultaneous Localization and Mapping (SLAM) system built on a 3D Gaussian representation. The system is designed to balance accuracy and efficiency. GS-SLAM uses a real-time differentiable splatting…