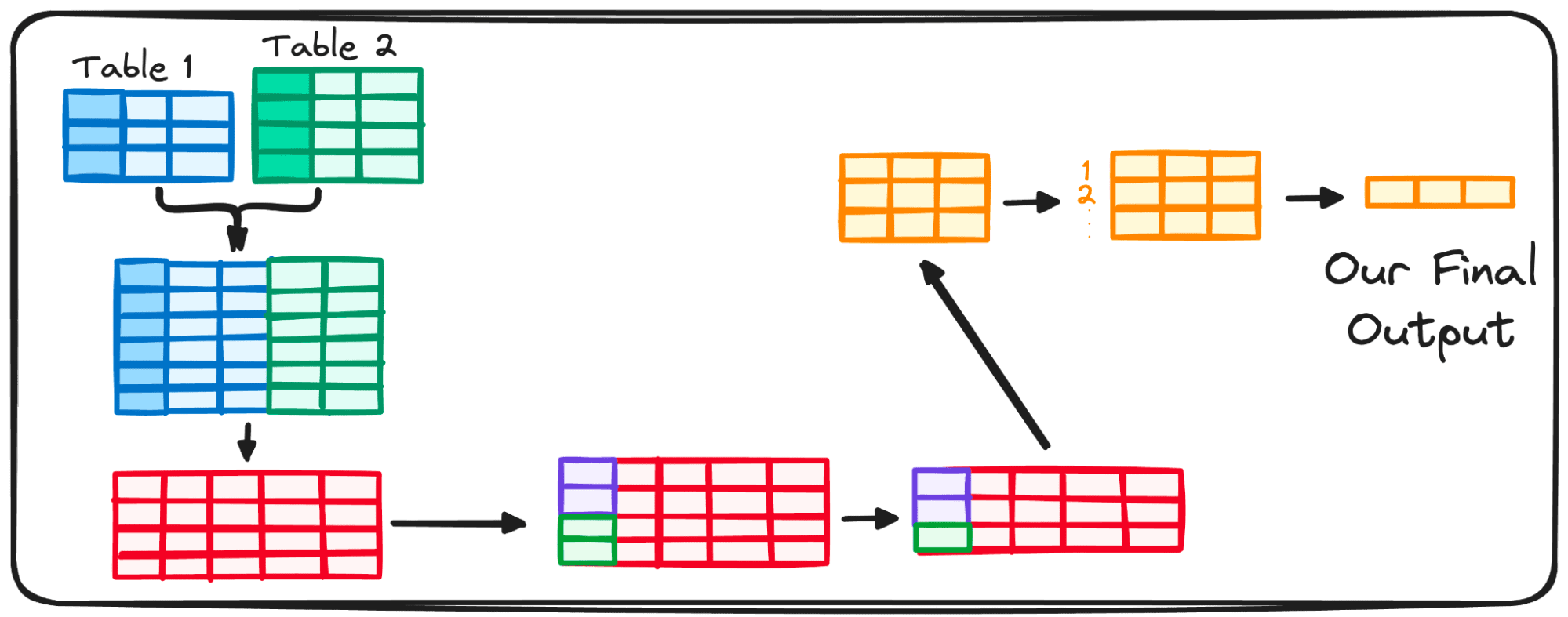

Image by Author

SQL has become a must-have language for any data professional.

Most of us use SQL in our daily work, and after writing many queries, we each develop our own style and habits, both good and bad.

SQL is usually learned by use, and in most cases, people do…