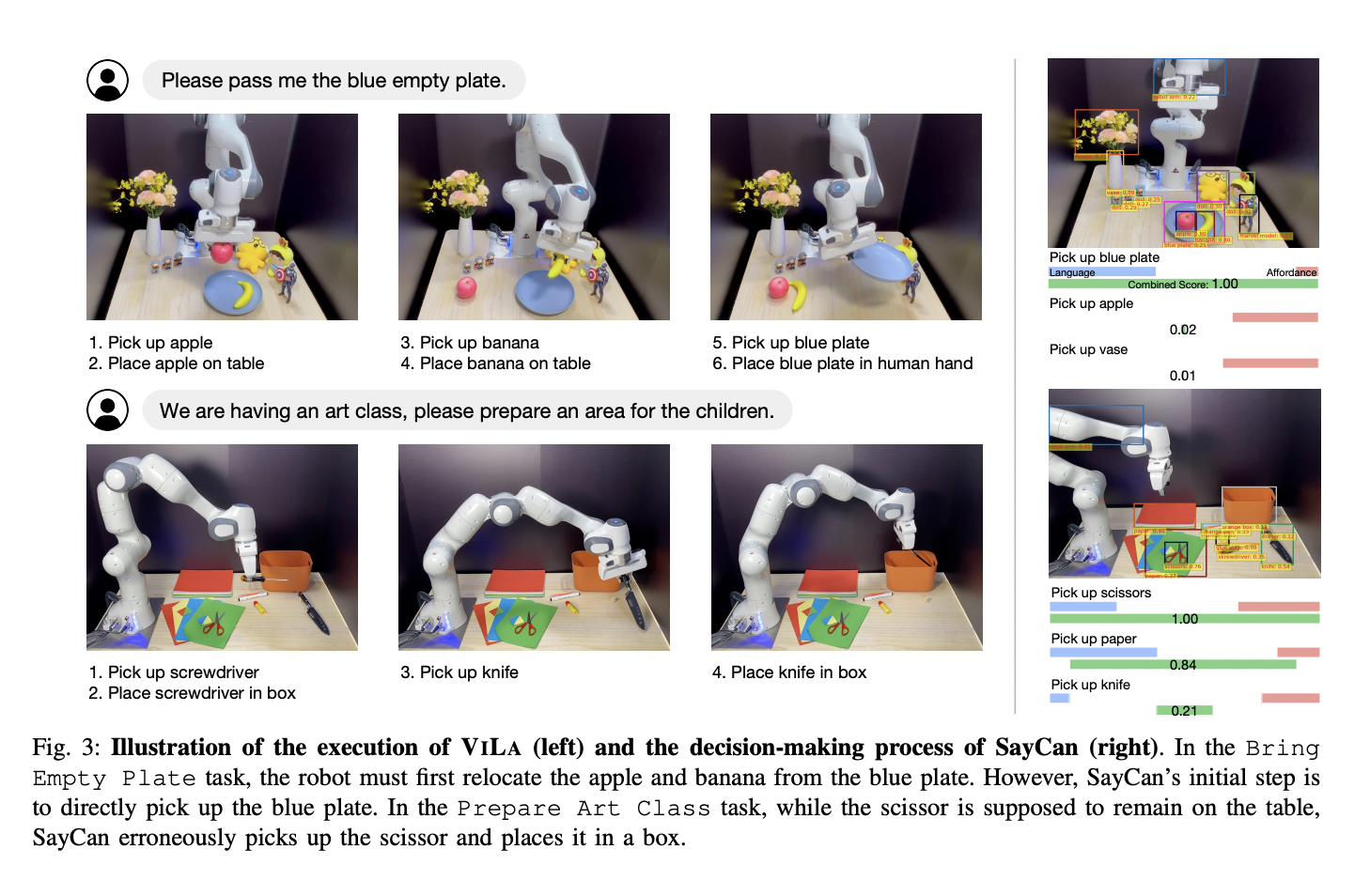

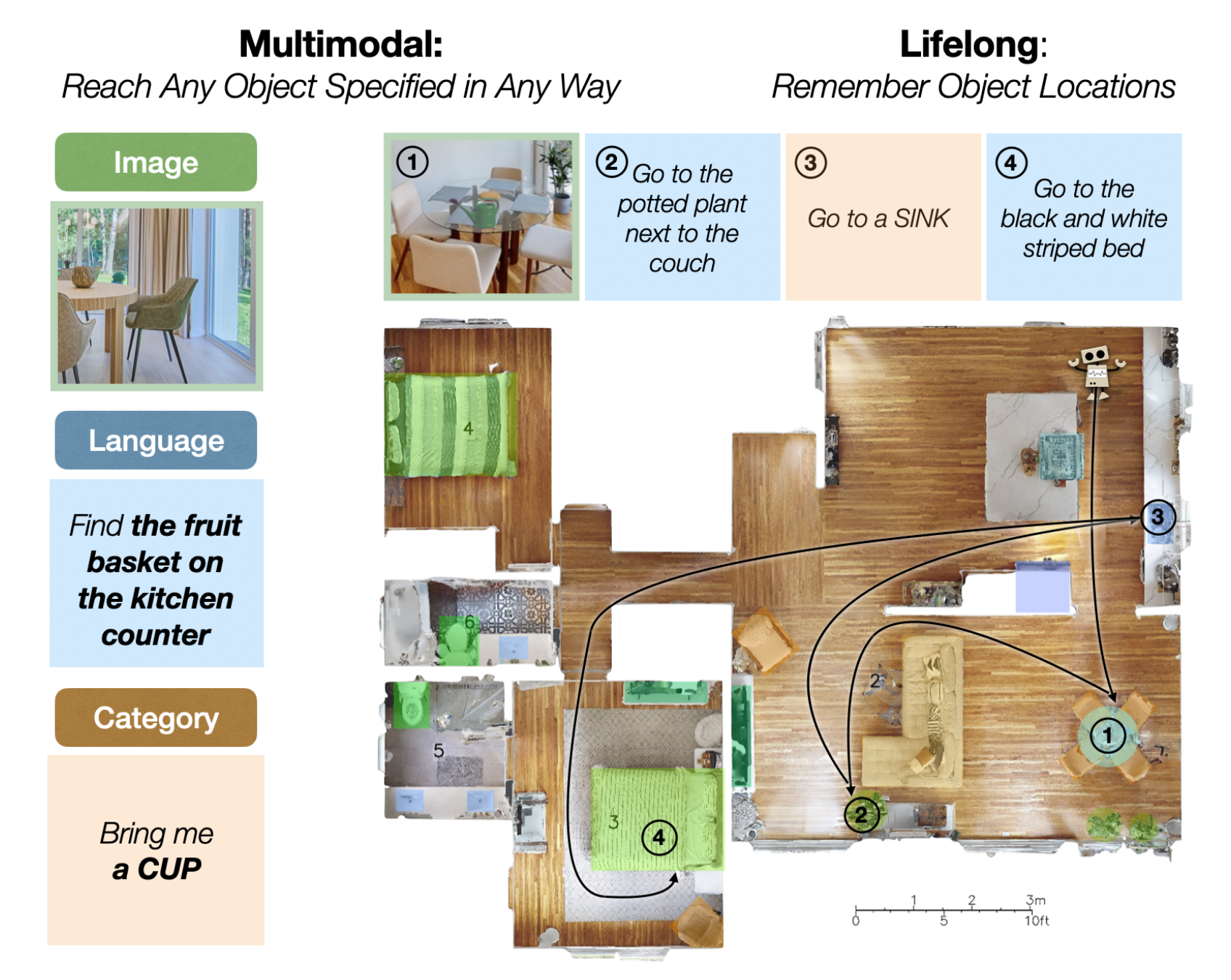

Researchers from Tsinghua University, Shanghai Artificial Intelligence Laboratory, and Shanghai Qi Zhi Institute have introduced Vision-Language Planning (VILA) to improve performance in robotic task planning. VILA integrates vision and language understanding, using the rich semantic knowledge encoded in GPT-4V to solve complex planning problems, even in zero-shot scenarios. This…