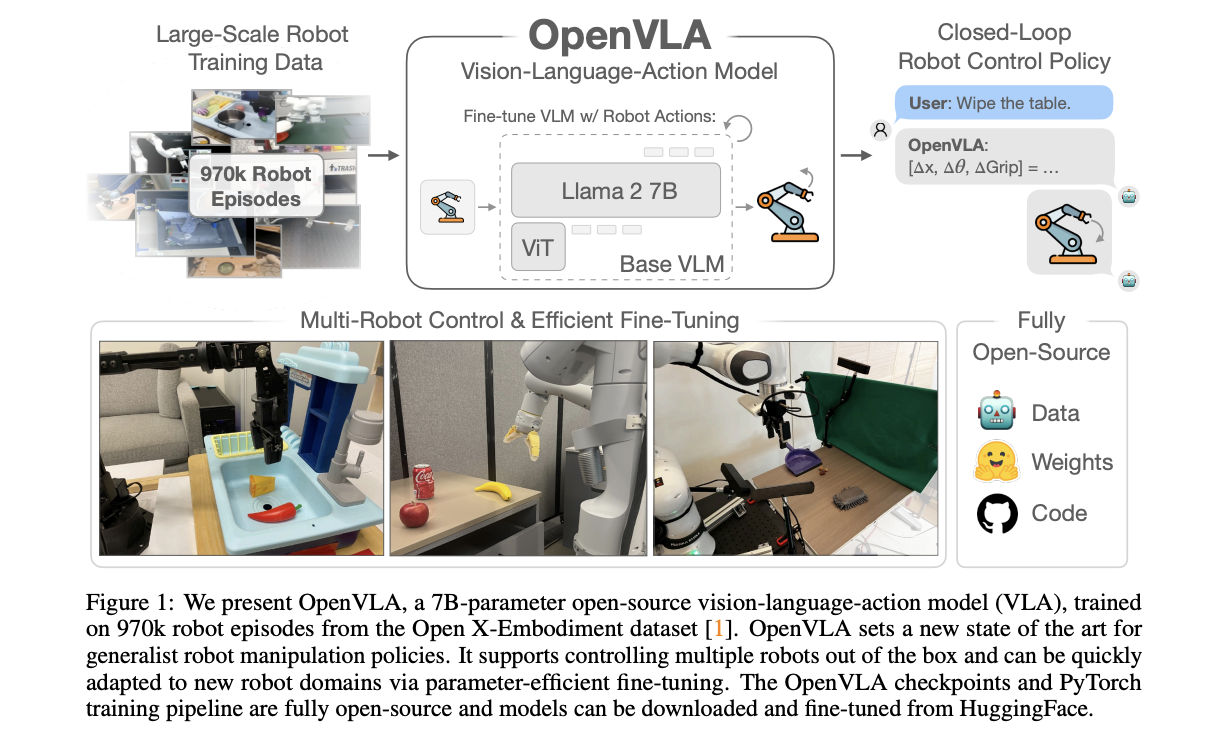

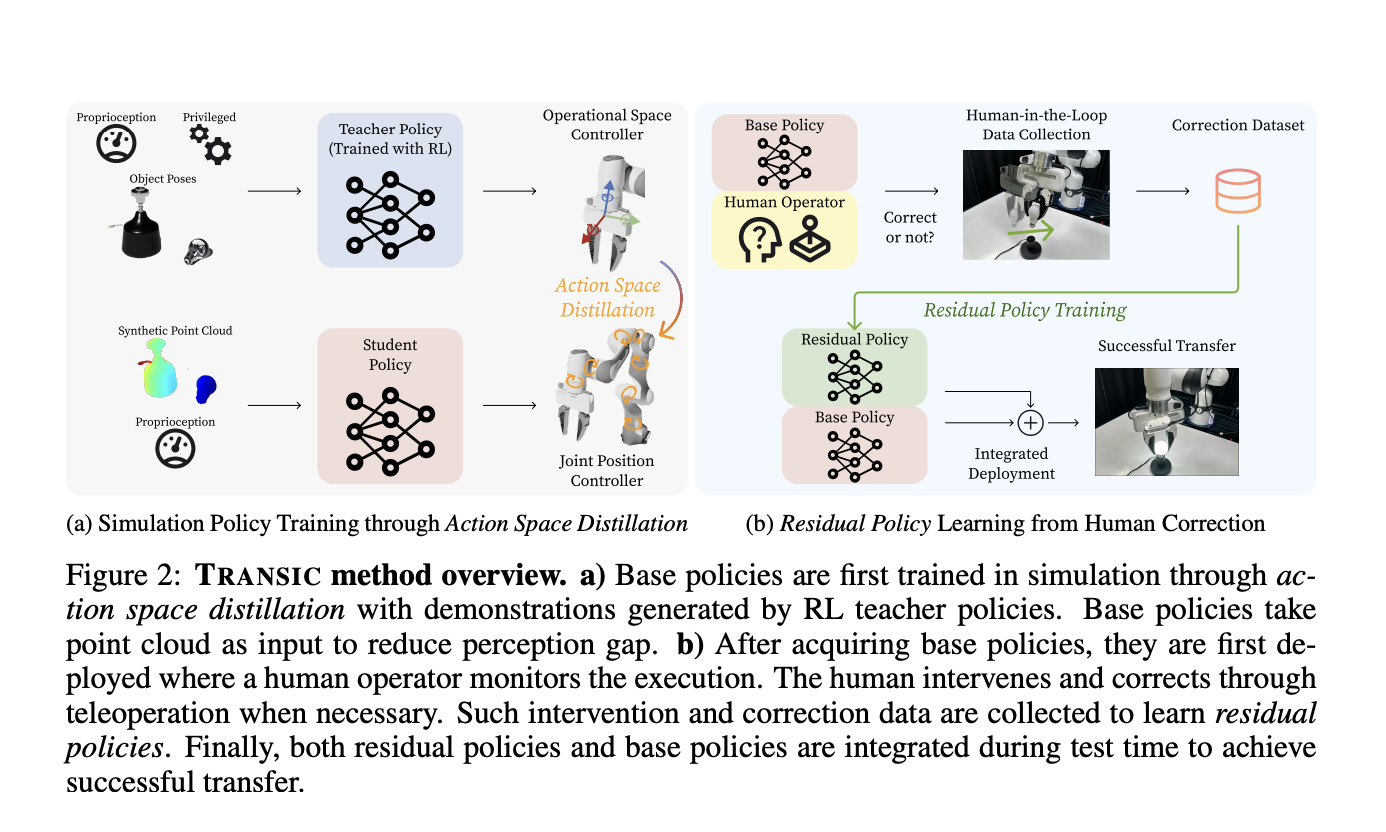

Robots are usually unsuitable for altering different tasks and environments. General-purpose models of robots are devised to circumvent this problem. They allow fine-tuning these general-purpose models for a wide scope of robotic tasks. However, it is challenging to maintain the consistency of shared open resources across various platforms. Success in real-world environments is far from…