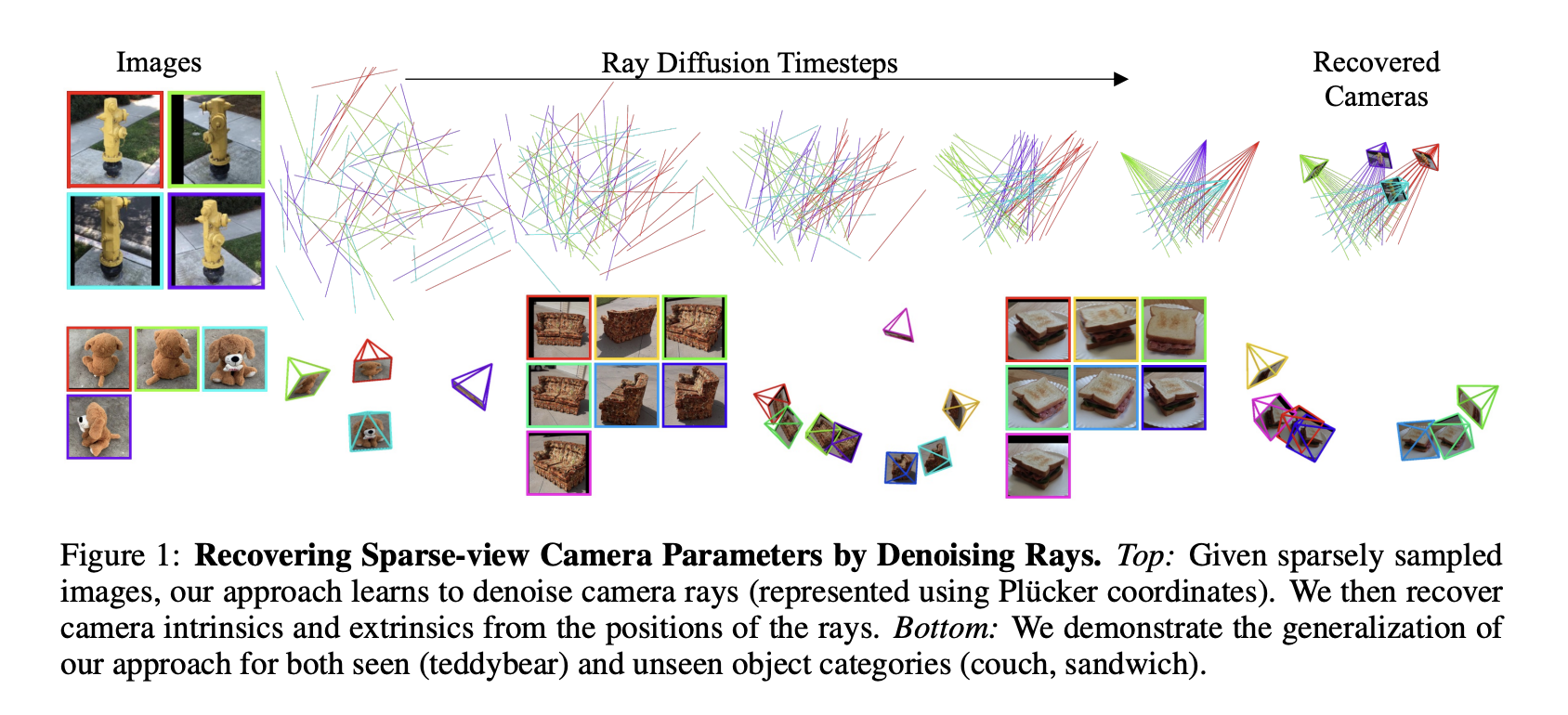

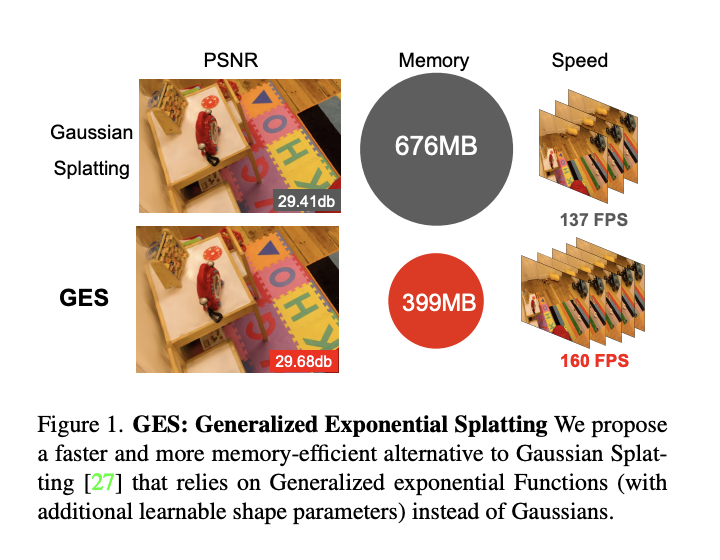

Reconstructing high-fidelity 3D representations from sparse images has advanced considerably, yet accurately estimating camera poses remains a significant hurdle. Traditional structure-from-motion methods often fail when only a few views are available, prompting a shift toward learning-based methods that predict camera poses from a sparse image set. These innovative approaches…