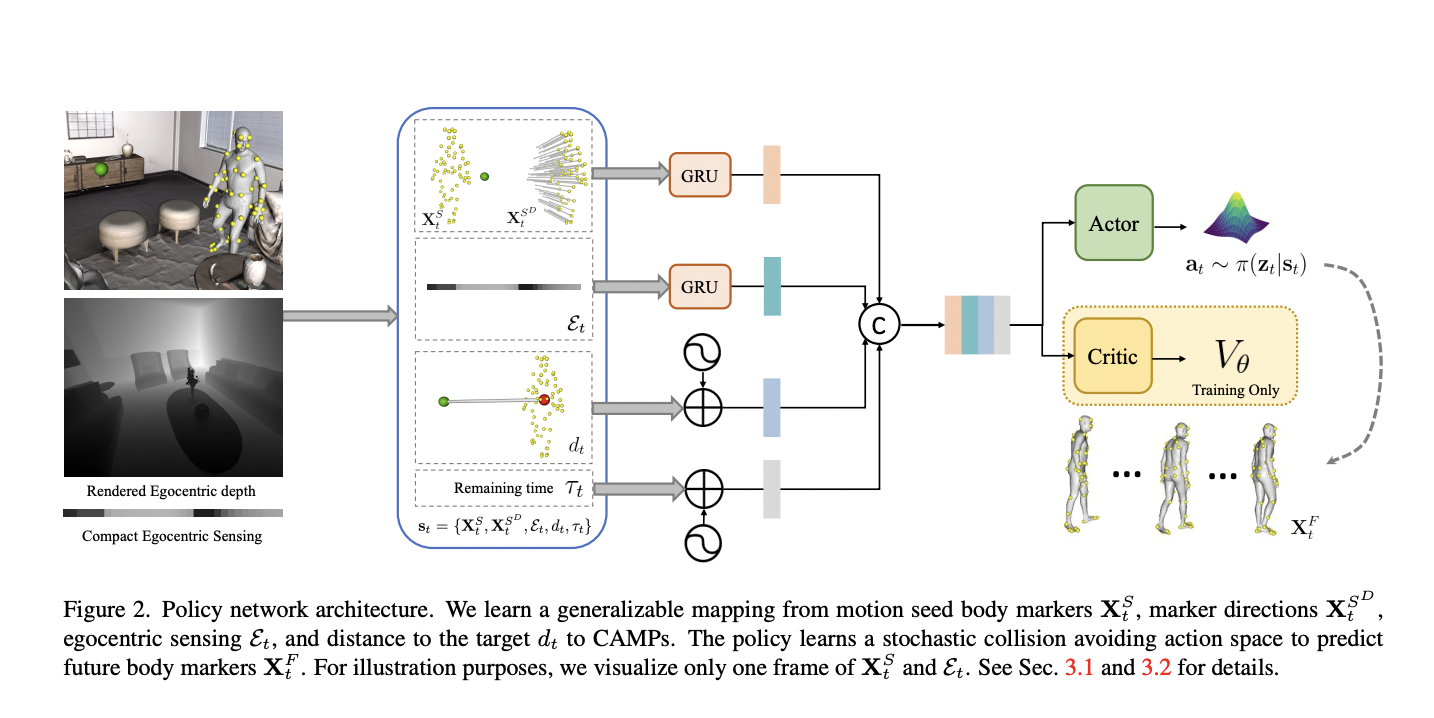

Understanding the world from a first-person perspective is essential in Augmented Reality (AR). This perspective introduces unique challenges and significant visual transformations relative to third-person views. While synthetic data has greatly benefited vision models in third-person settings, its use in embodied egocentric perception tasks remains largely unexplored. A major obstacle in this…