
In this blog post, we will review a popular educational GitHub repository with 24K ⭐. The repository provides a structured path to help you master Large Language Models (LLMs) for free. We will discuss the course structure, the Jupyter notebooks that contain code examples, and the articles that cover the latest LLM developments.

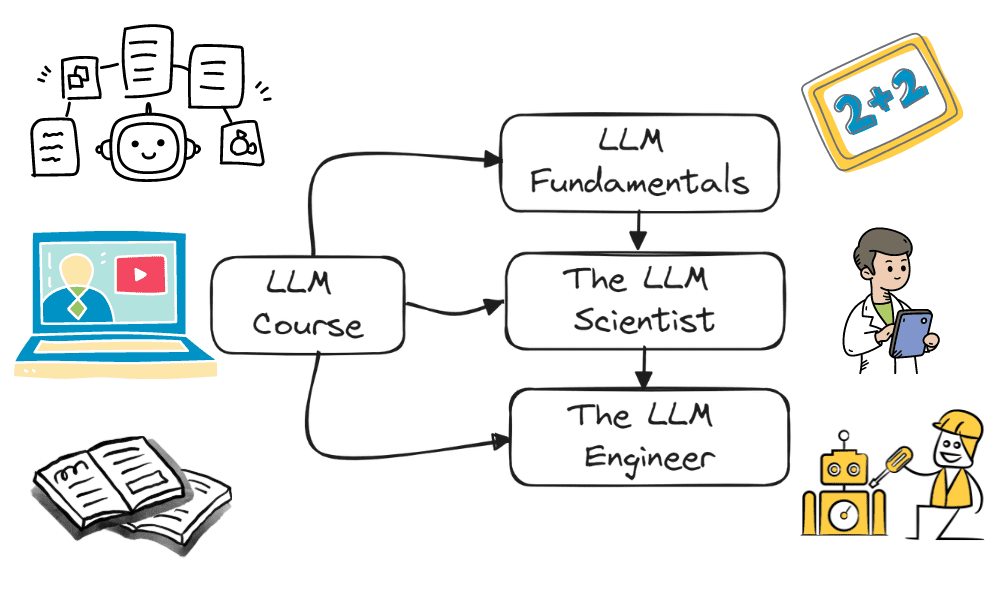

The Large Language Model Course is a comprehensive program designed to equip learners with the necessary skills and knowledge to excel in the rapidly evolving field of large language models. It consists of three core parts covering fundamental and advanced tools and concepts. Each core section contains multiple topics that come with YouTube tutorials, guides, and resources that are freely available online.

The LLM course offers a structured way of learning by gathering freely available resources, tutorials, videos, notebooks, and articles in one place. Even if you are a complete beginner, you can start with the fundamentals section and learn about the algorithms, techniques, and tools used to solve simple natural language and machine learning problems.

The course is divided into three main parts, each focusing on a different aspect of LLM expertise:

LLM Fundamentals

This foundational part addresses the essential knowledge required for understanding and working with LLMs. It covers mathematics, Python programming, the basics of neural networks, and natural language processing. For anyone looking to get into machine learning or deepen their understanding of its mathematical underpinnings, this section is invaluable. The resources provided, from 3Blue1Brown’s engaging video series to Khan Academy’s comprehensive courses, offer a variety of learning paths suitable for different learning styles.

Topics Covered:

- Mathematics for Machine Learning

- Python for Machine Learning

- Neural Networks

- Natural Language Processing (NLP)

The LLM Scientist

This LLM Scientist guide is designed for individuals who are interested in developing cutting-edge LLMs. It covers the architecture of LLMs, including Transformer and GPT models, and delves into advanced topics such as quantization, attention mechanisms, fine-tuning, and RLHF. The guide explains each topic in detail and provides tutorials and various resources to solidify the concepts. The whole concept is to learn by building.

Topics Covered:

- The LLM architecture

- Building an instruction dataset

- Pre-training models

- Supervised Fine-Tuning

- Reinforcement Learning from Human Feedback

- Evaluation

- Quantization

- New Trends

The LLM Engineer

This part of the course focuses on the practical application of LLMs. It will guide learners through the process of creating LLM-based applications and deploying them. The topics covered include running LLMs, building vector databases for retrieval-augmented generation, advanced RAG techniques, inference optimization, and deployment strategies. During this part of the course, you will learn about the LangChain framework and Pinecone for vector databases, which are essential for integrating and deploying LLM solutions.

Topics Covered:

- Running LLMs

- Building a Vector Storage

- Retrieval Augmented Generation

- Advanced RAG

- Inference optimization

- Deploying LLMs

- Securing LLMs
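To make the retrieval step of retrieval-augmented generation more concrete, here is a minimal sketch in plain Python. It is not taken from the course: the `ToyVectorStore` class, the `embed` function, and the sample documents are hypothetical stand-ins. A real application would use a learned embedding model and a vector database such as Pinecone, typically wired together with a framework like LangChain. The sketch only illustrates the idea of embedding documents, ranking them against a query, and placing the best match into the prompt.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (assumption: stands in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store: keeps (text, vector) pairs and ranks them by similarity."""

    def __init__(self):
        self.items = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, store: ToyVectorStore, k: int = 1) -> str:
    """Retrieve the top-k documents and prepend them to the question as context."""
    context = "\n".join(store.search(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


store = ToyVectorStore()
store.add("GGUF is a file format used by llama.cpp for quantized models")
store.add("LangChain is a framework for building LLM applications")
store.add("Pinecone is a managed vector database")

question = "which framework helps with building LLM applications"
prompt = build_prompt(question, store)
print(prompt)
```

The resulting prompt would then be sent to an LLM, which answers using the retrieved context rather than relying only on what it memorized during training.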

Building, fine-tuning, running inference on, and deploying models can be quite complex, requiring knowledge of various tools and careful attention to GPU memory and RAM usage. To help with this, the course offers a comprehensive collection of notebooks and articles that serve as useful references for implementing the concepts discussed.
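As a rough illustration of why memory needs attention, the back-of-the-envelope calculation below estimates how much memory the weights alone occupy at different precisions. This is a sketch, not something from the course: the `weight_memory_gb` helper is hypothetical, the 7-billion-parameter size is an assumed example, and the estimate deliberately ignores activations, the KV cache, optimizer state, and any quantization overhead.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Memory for the weights only, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 7e9  # assumption: a 7-billion-parameter model

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(N_PARAMS, bits):.1f} GB")
```

Under these assumptions, halving the precision halves the memory needed for the weights, which is the main reason quantization makes it possible to run larger models on consumer hardware.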

Notebooks and Articles on:

- Tools: Covers tools for automatically evaluating your LLMs, merging models, quantizing LLMs in GGUF format, and visualizing merged models.

- Fine-tuning: Provides Google Colab notebooks with step-by-step guides on fine-tuning models like Llama 2 and on using advanced techniques to improve performance.

- Quantization: The quantization notebooks dive deep into making LLMs more efficient using 4-bit GPTQ and GGUF quantization methods.
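To give a feel for what 4-bit quantization does, here is a minimal sketch of the simplest possible scheme: symmetric absmax rounding to signed 4-bit integers. This is explicitly not GPTQ and not the GGUF format, which are considerably more sophisticated. The function names and the sample weights are made up for illustration.

```python
def quantize_4bit(weights):
    """Map floats to integers in [-7, 7] using a single absmax scale (naive scheme)."""
    scale = max(abs(w) for w in weights) / 7 or 1.0  # fall back to 1.0 if all weights are zero
    q = [max(-7, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the 4-bit integers."""
    return [v * scale for v in q]


weights = [0.12, -0.53, 0.98, -1.40, 0.05]  # hypothetical weights
q, scale = quantize_4bit(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("quantized:", q)
print("restored: ", [round(r, 2) for r in restored])
print("max error:", round(max_error, 3))
```

Each weight is stored as a small integer plus one shared scale, so the rounding error per weight is bounded by half the scale. Methods such as GPTQ go further by choosing the quantized values so that the layer's outputs, rather than the individual weights, stay close to the original.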

Whether you’re a beginner seeking to understand the basics or a seasoned practitioner looking to stay current with the latest research and applications, the LLM course is an excellent resource for delving deeper into the world of LLMs. It gathers a wide range of freely available resources, tutorials, videos, notebooks, and articles in one place. The course spans everything from theoretical foundations to deploying cutting-edge LLMs, which makes it a valuable resource for anyone who wants to become an LLM expert. The accompanying notebooks and articles reinforce the concepts discussed in each section.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in Technology Management and a bachelor’s degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.