Modern large multimodal models (LMMs) can process not only text but also other types of data, such as images. Indeed, “a picture is worth a thousand words,” and this capability can be crucial when interacting with the real world. In this “weekend project,” I will combine a free LLaVA (Large Language-and-Vision Assistant) model, a camera, and a speech synthesizer to make an AI assistant that can help people with vision impairments. As in the previous parts, all components will run fully offline, without any cloud costs.

Without further ado, let’s get into it!

Components

In this project, I will use several components:

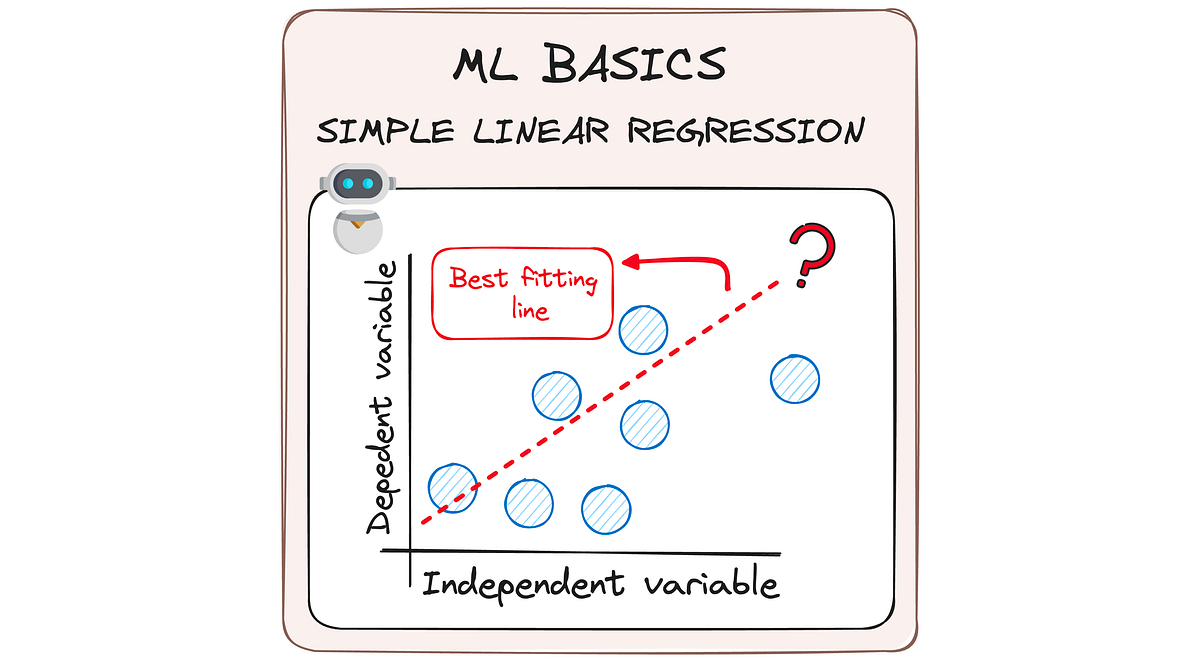

- A LLaVA model, which combines a large language model and a visual encoder with the help of a special projection matrix. This allows the model to understand not only text but also image prompts. I will be using the LlamaCpp library to run the model (despite its name, it can run not only LLaMA but also LLaVA models).

- The Streamlit Python library, which allows us to make an interactive UI. Using the camera, we can take a picture and ask the LMM different questions about it (for example, we can ask the model to describe the image).

- A TTS (text-to-speech) model, which converts the LMM’s answer into speech, so a person with vision impairment can listen to it. For the speech synthesis, I will use the MMS-TTS (Massively Multilingual Speech TTS) model made by Facebook.
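To make the “projection matrix” from the first item more concrete, here is a toy sketch in plain Python. It is not LLaVA code: the sizes, the random numbers, and the function names `project_patches` and `build_input_sequence` are my own assumptions for illustration. The idea it shows is that image patch features from the visual encoder are multiplied by a matrix so that they have the same size as the text token embeddings, and the language model then sees both as one sequence.

```python
import random


def project_patches(patch_features, projection):
    """Map vision-encoder patch features (n_patches x d_vision) into the
    text embedding space (n_patches x d_text) with a matrix multiplication."""
    d_vision = len(projection)
    d_text = len(projection[0])
    projected = []
    for patch in patch_features:
        if len(patch) != d_vision:
            raise ValueError("patch feature size must match projection rows")
        projected.append([
            sum(patch[i] * projection[i][j] for i in range(d_vision))
            for j in range(d_text)
        ])
    return projected


def build_input_sequence(image_tokens, text_tokens):
    """Put projected image tokens and text token embeddings into one sequence."""
    return image_tokens + text_tokens


# Toy sizes (assumptions): real models use hundreds of patches and
# embedding sizes in the thousands.
rng = random.Random(0)
D_VISION, D_TEXT = 4, 6
patches = [[rng.random() for _ in range(D_VISION)] for _ in range(3)]
W = [[rng.random() for _ in range(D_TEXT)] for _ in range(D_VISION)]
text_embeddings = [[rng.random() for _ in range(D_TEXT)] for _ in range(5)]

image_tokens = project_patches(patches, W)
sequence = build_input_sequence(image_tokens, text_embeddings)
print(len(sequence), len(sequence[0]))  # 8 6
```

After the projection, the 3 image patches and the 5 text tokens all have the same size, so they can be processed together as 8 tokens. In a real model the projection weights are learned during training, not random.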

As promised, all listed components are free to use, don’t need any cloud API, and can work fully offline. From a hardware perspective, the model can run on any Windows or Linux laptop or tablet (a GPU with 8 GB of memory is recommended but not mandatory), and the UI can work in any browser, even on a smartphone.
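Before going into the details, here is a rough sketch of how the three components fit together. The function `run_assistant` and the two stand-in functions are hypothetical names I use only for illustration. In a real app, the image bytes could come from a camera widget such as Streamlit's `st.camera_input`, `describe` would wrap the LLaVA model, and `speak` would wrap the TTS model.

```python
from typing import Callable, Tuple


def run_assistant(
    image_bytes: bytes,
    question: str,
    describe: Callable[[bytes, str], str],
    speak: Callable[[str], bytes],
) -> Tuple[str, bytes]:
    """Camera image -> LMM answer -> synthesized speech."""
    if not image_bytes:
        raise ValueError("no image captured")
    answer = describe(image_bytes, question).strip()
    audio = speak(answer)
    return answer, audio


# Stand-ins (assumptions) so the sketch runs without any model or camera.
def fake_describe(image_bytes: bytes, question: str) -> str:
    return f" A {len(image_bytes)}-byte image; question was: {question} "


def fake_speak(text: str) -> bytes:
    # A real TTS model would return audio samples; here we only encode the text.
    return text.encode("utf-8")


answer, audio = run_assistant(
    b"\x89PNG", "Describe the image.", fake_describe, fake_speak
)
print(answer)
```

Passing the model and the synthesizer in as plain functions keeps each stage easy to replace or test on its own, and none of the stages needs a network connection.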

Let’s get started.

LLaVA

LLaVA (Large Language-and-Vision Assistant) is an open-source large multimodal model that combines a vision encoder and an LLM for visual and language understanding. As mentioned before, I’ll use LlamaCpp to load the model. This…