Human-robot interaction poses numerous challenges. One of them is enabling robots to display human-like expressive behaviors. Traditional rule-based methods scale poorly to new social contexts, while data-driven approaches are limited by their need for extensive, task-specific datasets. This limitation becomes more pronounced as the variety of social interactions a robot might encounter grows, creating demand for more adaptable, context-sensitive approaches to programming robot behavior.

Research on generating socially acceptable behaviors for robots and virtual humans spans rule-based, template-based, and data-driven methods. Rule-based approaches rely on formalized rules but lack expressivity and multimodal capabilities. Template-based methods derive interaction patterns from human traces, yet they also suffer from limited expressivity. Data-driven models, which employ machine learning or generative models, are limited by data inefficiency and their need for specialized datasets. Large Language Models (LLMs) have shown promise in robotics for tasks such as planning and reacting to feedback, and they can support social reasoning and the inference of user preferences.

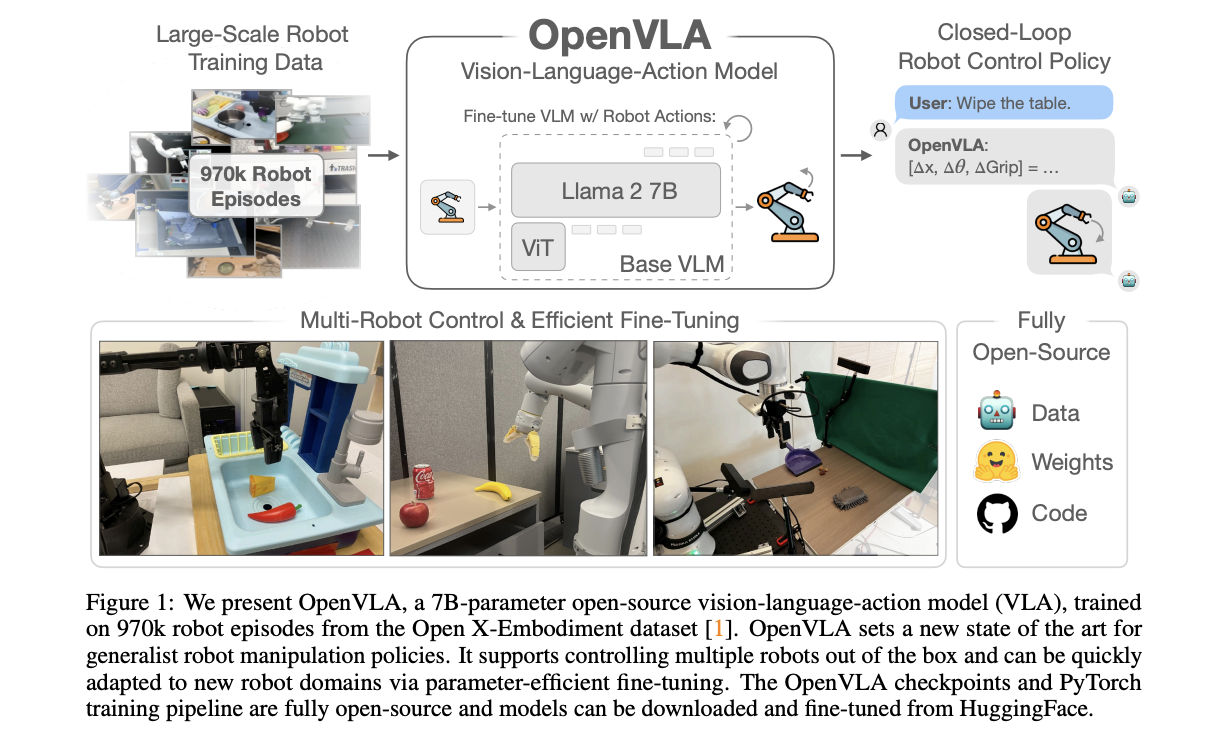

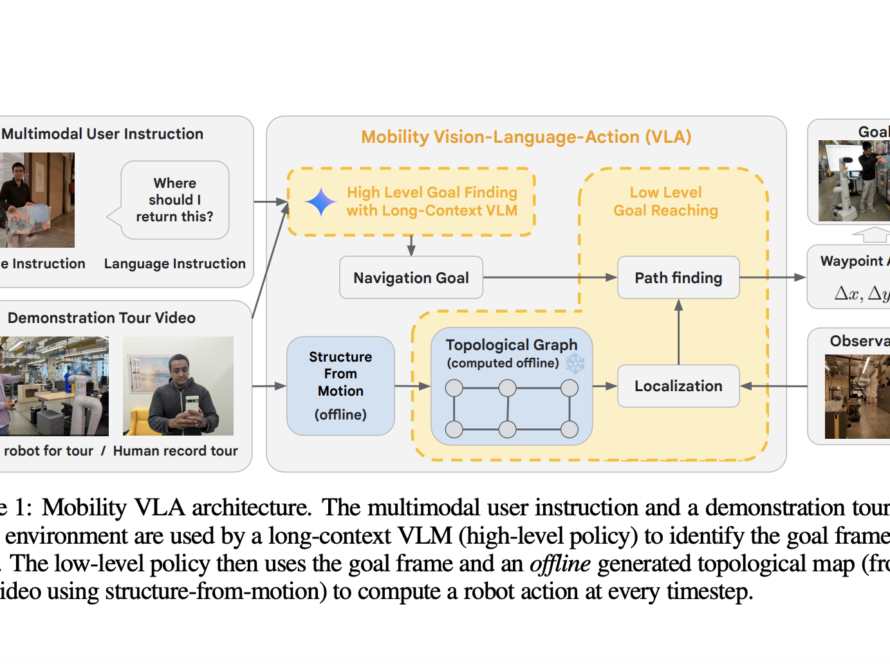

Researchers at Google DeepMind and the University of Toronto have proposed Generative Expressive Motion (GenEM), an approach that uses LLMs to generate expressive robot behaviors. GenEM draws on the rich social context encoded in LLMs to create adaptable and composable expressive robot motion. It uses few-shot chain-of-thought prompting to translate natural-language instructions into parameterized control code built from the robot's available and learned skills.

Behaviors are generated in multiple steps, starting from a user instruction and ending with code that the robot executes. The approach was evaluated in two user studies that compared the generated behaviors with those created by a professional animator. According to the researchers, the method offers greater expressivity and adaptability than traditional rule-based and data-driven approaches. They also used user feedback to update the robot's policy parameters and generated new expressive behaviors by composing existing ones.
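The multi-step pipeline described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a hypothetical `llm` callable that maps a prompt string to a completion string, and every name in it (`FewShotExample`, `build_prompt`, `generate_behavior`, and the skill names `nod_head` and `set_light_color`) is hypothetical. The three stages (describe how a person would express the instruction, restate that using only the robot's skills, then emit code against the robot's API) loosely mirror the steps described in the article, and the prompt wording is a placeholder.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical: any function mapping a prompt string to a completion string.
LLM = Callable[[str], str]


@dataclass
class FewShotExample:
    instruction: str
    reasoning: str  # chain-of-thought text shown to the model as a worked example
    output: str


def build_prompt(task: str, examples: Sequence[FewShotExample], query: str) -> str:
    """Assemble a few-shot chain-of-thought prompt for one pipeline stage."""
    parts = [task]
    for ex in examples:
        parts.append(f"Input: {ex.instruction}\nReasoning: {ex.reasoning}\nOutput: {ex.output}")
    # End with an open "Reasoning:" slot so the model reasons before answering.
    parts.append(f"Input: {query}\nReasoning:")
    return "\n\n".join(parts)


def generate_behavior(instruction: str, robot_skills: List[str], llm: LLM,
                      examples: Sequence[FewShotExample] = ()) -> str:
    """Hypothetical three-stage chain: instruction -> human motion -> robot motion -> code."""
    # Stage 1: how would a person socially express this instruction?
    human_motion = llm(build_prompt(
        "Describe how a person would express this behavior.", examples, instruction))
    # Stage 2: restate that motion using only the robot's available skills.
    robot_motion = llm(build_prompt(
        f"Rewrite the motion using only these robot skills: {', '.join(robot_skills)}.",
        [], human_motion))
    # Stage 3: translate the robot motion into calls against the robot's API.
    return llm(build_prompt(
        "Translate the robot motion into parameterized robot API calls.", [], robot_motion))


# Usage with a stub LLM that records each prompt and labels its answer by stage.
calls: List[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"stage{len(calls)} output"


example = FewShotExample(
    instruction="Greet a person who makes eye contact",  # hypothetical few-shot example
    reasoning="People often nod or wave to acknowledge eye contact.",
    output="Nod once, then wave.",
)
result = generate_behavior("Acknowledge a person walking by",
                           ["nod_head", "set_light_color"], fake_llm, [example])
print(result)  # stage3 output
```

Chaining the stages keeps each prompt focused on one question, and each stage's output becomes the next stage's input. In practice the stub would be replaced by a real model client; handling user feedback as an additional prompting stage that revises the generated code would be a further assumption, not something this sketch implements.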

The two user studies demonstrate GenEM's effectiveness: participants perceived the generated behaviors as competent and understandable. The researchers also ran experiments with a mobile robot and a simulated quadruped, in which the approach outperformed an ablated version that translated language instructions directly into code. These experiments further showed that the approach can generate behaviors that are embodiment-agnostic and composable.

In conclusion, GenEM marks a significant advance in robotics, demonstrating that LLMs can autonomously generate expressive, adaptable, and composable robot behaviors. The work highlights the potential of LLMs to facilitate effective human-robot interaction.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Materials Science, he is exploring new advancements and creating opportunities to contribute.