Efficient processing of visual data is one of the more intriguing problems in the fast-moving field of computer vision, and it underpins applications ranging from automated image analysis to intelligent systems. A pressing challenge is interpreting complex visual information, particularly reconstructing detailed images from partial data. Traditional methods have made strides, but the search for more efficient and effective techniques continues.

Self-supervised learning and generative modeling have been at the forefront of visual data processing. While groundbreaking, these methods can be computationally demanding on complex visual tasks, and masked autoencoders (MAE) are a case in point. An MAE hides a large fraction of an image's patches and learns to reconstruct them from the remaining visible patches. The encoder sees only the visible patches, but the decoder runs self-attention over the full set of tokens, visible and masked alike. Because the cost of self-attention grows quadratically with the number of tokens, decoding adds considerable computation.
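The masking step itself is simple. The sketch below splits an image's patch indices into a visible set and a masked set uniformly at random. The 196-patch grid (a 224x224 image cut into 16x16 patches) and the 75% mask ratio are assumptions borrowed from common MAE setups, and `random_masking` is a hypothetical helper, not code from the CrossMAE repository.

```python
import random


def random_masking(num_patches, mask_ratio, seed=None):
    """Split patch indices into visible and masked sets, uniformly at random.

    Hypothetical helper for illustration; real MAE code shuffles token
    tensors in batches rather than Python lists.
    """
    rng = random.Random(seed)
    indices = list(range(num_patches))
    rng.shuffle(indices)
    num_visible = int(num_patches * (1 - mask_ratio))
    visible = sorted(indices[:num_visible])   # fed to the encoder
    masked = sorted(indices[num_visible:])    # to be reconstructed by the decoder
    return visible, masked


# Assumed setup: a 14 x 14 patch grid (196 patches) with 75% of patches masked.
visible, masked = random_masking(196, mask_ratio=0.75, seed=0)
print(len(visible), len(masked))  # 49 147
```

In a standard MAE, the decoder then processes all 196 tokens (49 encoded visible tokens plus 147 mask tokens) with self-attention.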

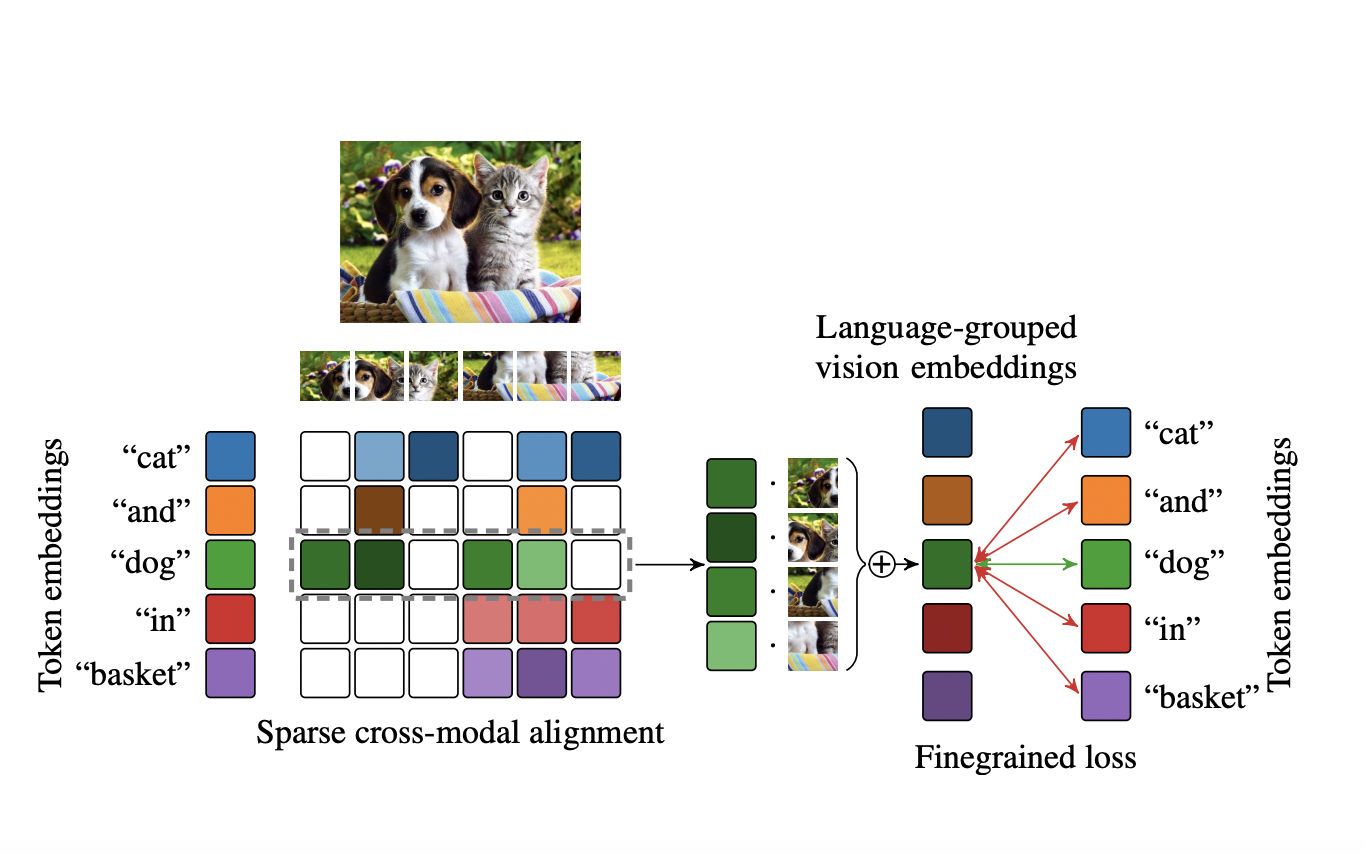

Researchers from UC Berkeley and UCSF have introduced Cross-Attention Masked Autoencoders (CrossMAE) to address these challenges. The framework departs from the conventional MAE by decoding the masked patches with cross-attention alone. In a standard MAE, the decoder applies self-attention over all tokens, so each mask token attends both to the visible tokens and to the other mask tokens, which makes decoding more complex and computationally intensive. CrossMAE keeps only the first of these interactions: mask tokens attend to visible tokens through cross-attention, which simplifies and speeds up decoding.
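To make the distinction concrete, here is a minimal single-head cross-attention sketch in plain Python, assuming identity query/key/value projections and no positional embeddings (a real decoder uses learned projections, multiple heads, and positional information on the mask tokens). Queries come from mask tokens; keys and values come from visible tokens. The function names are hypothetical.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product cross-attention.

    queries: vectors for the mask tokens being decoded
    keys, values: vectors for the visible tokens from the encoder
    Each query attends only to the visible tokens, never to the other
    queries, so there is no self-attention among mask tokens.
    """
    scale = 1.0 / math.sqrt(len(queries[0]))
    dim_out = len(values[0])
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in keys])
        # Weighted sum of the visible tokens' value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(dim_out)])
    return outputs


# Toy example: 3 visible tokens and 2 mask-token queries, all 4-dimensional.
visible = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, 0.2], [0.3, 0.3, 0.3, 0.3]]
mask_queries = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
decoded = cross_attention(mask_queries, visible, visible)
print(len(decoded), len(decoded[0]))  # 2 4
```

Because no query ever looks at another query, the output for one mask token is the same whether it is decoded alone or alongside others.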

The key to CrossMAE’s efficiency is this decoding mechanism: mask tokens draw information only from visible tokens, so there is no self-attention among the mask tokens, a notable departure from the standard MAE. Because each mask token is therefore decoded independently of the others, the CrossMAE decoder can reconstruct just a subset of the masked patches, which speeds up both processing and training. According to the researchers, this change does not compromise reconstruction quality or downstream performance, making CrossMAE an efficient alternative to the conventional design.
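Partial reconstruction can be sketched as choosing a subset of the masked patches to decode and computing the reconstruction loss only on that subset. The `fraction` knob, the helper names, and the toy data are assumptions for illustration; the exact parameterization CrossMAE uses may differ.

```python
import random


def select_decode_subset(masked, fraction, seed=None):
    """Choose which masked patches the decoder reconstructs.

    `fraction` is a hypothetical knob for this sketch: the share of masked
    patches to decode. Because mask tokens do not attend to each other,
    leaving some out does not change the outputs for the rest.
    """
    rng = random.Random(seed)
    k = max(1, round(len(masked) * fraction))
    return sorted(rng.sample(masked, k))


def mse_on_subset(pred, target, subset):
    """Mean squared error over the decoded patches only.

    pred:   dict mapping patch index -> predicted pixel values (decoded patches only)
    target: list of ground-truth pixel values for every patch
    """
    total, count = 0.0, 0
    for i in subset:
        for p, t in zip(pred[i], target[i]):
            total += (p - t) ** 2
            count += 1
    return total / count


masked = list(range(49, 196))  # 147 masked patch indices (toy values)
subset = select_decode_subset(masked, fraction=1 / 3, seed=0)
target = [[float(i)] * 3 for i in range(196)]
pred = {i: [float(i) + 1.0] * 3 for i in subset}  # toy predictions, each off by 1
print(len(subset), mse_on_subset(pred, target, subset))  # 49 1.0
```

Patches outside the subset never enter the decoder or the loss, which is where the training-time savings come from.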

In benchmarks such as ImageNet classification and COCO instance segmentation, CrossMAE matched or outperformed conventional MAE models while requiring substantially less decoding computation. Reconstruction quality and downstream performance were not degraded, indicating that CrossMAE can handle complex visual tasks more efficiently.
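A rough sense of where the decoding savings come from: the sketch below counts attention-score entries per head in one decoder layer. The token counts (196 patches, 75% masked, a third of the masked patches decoded) are illustrative assumptions, not figures reported in the paper, and the count ignores projections, MLPs, and differences in decoder width or depth.

```python
def self_attention_scores(num_tokens):
    # Full self-attention: every token scores every token, itself included.
    return num_tokens * num_tokens


def cross_attention_scores(num_queries, num_keys):
    # Cross-attention: each decoded mask token scores only the visible tokens.
    return num_queries * num_keys


num_patches = 196   # 14 x 14 grid of patches (assumed)
num_visible = 49    # 75% masking ratio (assumed)
num_masked = num_patches - num_visible  # 147
num_decoded = 49    # partial reconstruction: a third of the masked patches (assumed)

mae = self_attention_scores(num_patches)
cross_all = cross_attention_scores(num_masked, num_visible)
cross_partial = cross_attention_scores(num_decoded, num_visible)
print(mae, cross_all, cross_partial)  # 38416 7203 2401
```

Under these assumptions, dropping mask-to-mask attention alone cuts the score count by roughly 5x, and decoding only a subset of mask tokens brings the reduction to 16x.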

CrossMAE redefines the approach to masked autoencoders in computer vision. By relying on cross-attention and reconstructing only part of the masked image, it offers a more efficient way to handle visual data. The research suggests that even simple design changes can yield significant gains in computational efficiency without sacrificing performance on complex tasks.

In conclusion, the introduction of CrossMAE in computer vision is a significant advancement. It reimagines the decoding mechanism of masked autoencoders and demonstrates a more efficient path for processing visual data. The research underlines the potential of CrossMAE as a groundbreaking alternative, offering a blend of efficiency and effectiveness that could redefine approaches in computer vision and beyond.

Check out the Paper, Project, and GitHub. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I am a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.