With the increase in the popularity and use cases of Artificial Intelligence, Imitation learning (IL) has shown to be a successful technique for teaching neural network-based visuomotor strategies to perform intricate manipulation tasks. The problem of building robots that can do a wide variety of manipulation tasks has long plagued the robotics community. Robots face a variety of environmental elements in real-world circumstances, including shifting camera views, changing backgrounds, and the appearance of new object instances. These perception differences have frequently been shown to be obstacles to conventional robotics methods.

Improving the robustness and adaptability of IL algorithms to environmental variables is critical in order to utilise their capabilities. Previous research has shown that even little visual changes in the environment, including backdrop colour changes, camera viewpoint alterations, or the addition of new object instances, can have an impact on end-to-end learning policies, as a result of which, IL policies are usually assessed in controlled circumstances using cameras that are calibrated correctly and fixed backgrounds.

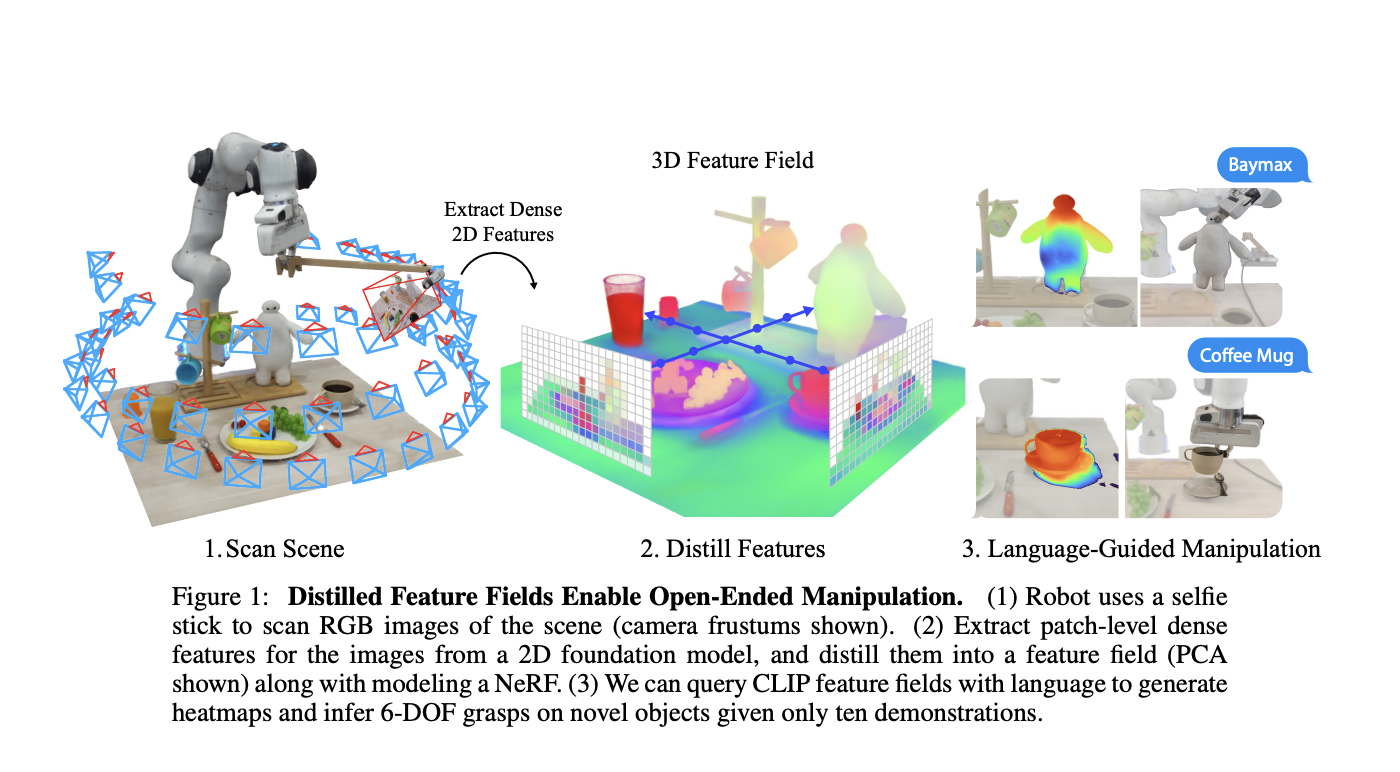

Recently, a team of researchers from The University of Texas at Austin and Sony AI has introduced GROOT, a unique imitation learning technique that builds strong policies for manipulation tasks involving vision. It tackles the problem of allowing robots to function well in real-world settings, where there are frequent changes in background, camera viewpoint, and object introduction, among other perceptual alterations. In order to overcome these obstacles, GROOT focuses on building object-centric 3D representations and reasoning over them using a transformer-based strategy and also proposes a connection model for segmentation, which allows rules to generalise to new objects in testing.

The development of object-centric 3D representations is the core of GROOT’s innovation. The purpose of these representations is to direct the robot’s perception, help it concentrate on task-relevant elements, and help it block out visual distractions. GROOT gives the robot a strong framework for decision-making by thinking in three dimensions, which provides it with a more intuitive grasp of the environment. GROOT uses a transformer-based approach to reason over these object-centric 3D representations. It is able to efficiently analyse the 3D representations and make judgements and is a significant step towards giving robots more sophisticated cognitive capabilities.

GROOT has the ability to generalise outside of the initial training settings and is good at adjusting to various backgrounds, camera angles, and the presence of items that haven’t been observed before, whereas many robotic learning techniques are inflexible and have trouble in such settings. GROOT is an exceptional solution to the intricate problems that robots encounter in the actual world because of its exceptional generalisation potential.

GROOT has been tested by the team through a number of extensive studies. These tests thoroughly assess GROOT’s capabilities in both simulated and real-world settings. It has been shown to perform exceptionally well in simulated situations, especially when perceptual differences are present. It outperforms the most recent techniques, such as object proposal-based tactics and end-to-end learning methodologies.

In conclusion, in the area of robotic vision and learning, GROOT is a major advancement. Its emphasis on robustness, adaptability, and generalisation in real-world scenarios can make numerous applications possible. GROOT has addressed the problems of robust robotic manipulation in a dynamic world and has led to robots functioning well and seamlessly in complicated and dynamic environments.

Check out the Paper, Github, and Project. All Credit For This Research Goes To the Researchers on This Project. Also, don’t forget to join our 32k+ ML SubReddit, 40k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

If you like our work, you will love our newsletter..

We are also on WhatsApp. Join our AI Channel on Whatsapp..

Tanya Malhotra is a final year undergrad from the University of Petroleum & Energy Studies, Dehradun, pursuing BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.